Image by Stanford

Summary

A GPU implementation that computes word similarities using the GloVe word-embedding model, whose vocabulary contains $2.2\cdot10^6$ words.
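GloVe vectors are commonly distributed as plain text, with one word per line followed by its space-separated components. The README does not say how the vectors are loaded, so the following is only a hedged C++ sketch that assumes that text format. The `Embeddings` struct and `parse_glove_line` function are hypothetical names. The sketch stores all vectors in one flat, row-major array, a layout that can be copied to GPU memory in a single transfer.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical container for a GloVe vocabulary: words[i] owns the row
// vectors[i * dim .. (i + 1) * dim) of a flat, row-major float matrix.
struct Embeddings {
    std::size_t dim = 0;
    std::vector<std::string> words;
    std::vector<float> vectors;
};

// Parses one line in the assumed GloVe text format ("word v1 v2 ... vd")
// and appends it to `emb`. The first accepted line fixes the dimension;
// lines with no components or a different component count are rejected.
bool parse_glove_line(const std::string& line, Embeddings& emb) {
    std::istringstream in(line);
    std::string word;
    if (!(in >> word)) return false;

    std::vector<float> values;
    float v;
    while (in >> v) values.push_back(v);  // stops at end of line or bad token

    if (values.empty()) return false;
    if (emb.dim == 0) emb.dim = values.size();
    if (values.size() != emb.dim) return false;

    emb.words.push_back(word);
    emb.vectors.insert(emb.vectors.end(), values.begin(), values.end());
    return true;
}
```

To load a whole file, read it line by line with `std::getline` and call `parse_glove_line` on each line. Rejected lines are skipped.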

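Given a query word, the program computes the cosine similarity between its vector and the vector of every other word in the vocabulary. This is the cosine of the angle between the two vectors (the corresponding cosine distance is $1$ minus this value):

$$ \operatorname{cosSim}(\vec A, \vec B) = \frac{\vec A \cdot \vec B}{\lVert\vec A\rVert\,\lVert\vec B\rVert} $$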
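The formula translates directly into a small C++ function. This is a hedged CPU sketch for illustration, not the project's GPU kernel. Returning 0 for an all-zero vector is an assumption, because the formula is undefined in that case.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Cosine similarity of two equal-length vectors: dot(A, B) / (|A| * |B|).
// Accumulates in double to limit rounding error over hundreds of dimensions.
// Returns 0 if either vector is all zeros (assumed convention).
double cosine_similarity(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    double dot = 0.0, norm_a = 0.0, norm_b = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        dot += double(a[i]) * b[i];
        norm_a += double(a[i]) * a[i];
        norm_b += double(b[i]) * b[i];
    }
    if (norm_a == 0.0 || norm_b == 0.0) return 0.0;
    return dot / (std::sqrt(norm_a) * std::sqrt(norm_b));
}
```

When one query is compared against millions of words, a common design choice is to normalize every vector to unit length once. Each comparison then reduces to a plain dot product.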

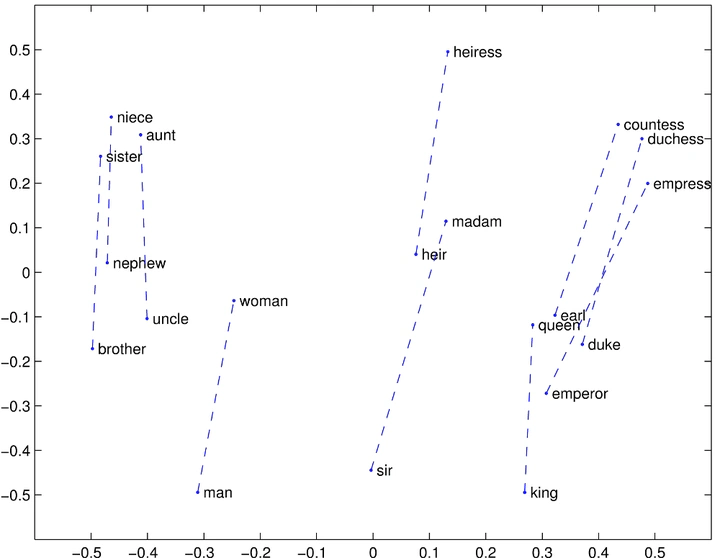

Because similarity is measured by the angle between vectors, the program can also find pairs of words with analogous relationships. For example, searching for the pair king - queen returns, among others, the pair men - women.
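The README does not say how pair relationships are scored. One common approach, assumed here, compares the offset vectors of the two pairs (for example $\vec{king} - \vec{queen}$ against $\vec{men} - \vec{women}$) with the same cosine formula. The functions `offset` and `relation_similarity` are hypothetical names in this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Element-wise difference a - b: the offset vector that encodes the
// relationship between two words (e.g. king - queen).
std::vector<float> offset(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    std::vector<float> d(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) d[i] = a[i] - b[i];
    return d;
}

// Cosine similarity of the two offset vectors: a value near 1 means the
// pairs (a1, b1) and (a2, b2) differ "in the same direction".
// Returns 0 if either offset is all zeros (assumed convention).
double relation_similarity(const std::vector<float>& a1, const std::vector<float>& b1,
                           const std::vector<float>& a2, const std::vector<float>& b2) {
    const std::vector<float> d1 = offset(a1, b1);
    const std::vector<float> d2 = offset(a2, b2);
    assert(d1.size() == d2.size());
    double dot = 0.0, n1 = 0.0, n2 = 0.0;
    for (std::size_t i = 0; i < d1.size(); ++i) {
        dot += double(d1[i]) * d2[i];
        n1 += double(d1[i]) * d1[i];
        n2 += double(d2[i]) * d2[i];
    }
    if (n1 == 0.0 || n2 == 0.0) return 0.0;
    return dot / (std::sqrt(n1) * std::sqrt(n2));
}
```

With made-up 2-D vectors king = (2, 1), queen = (2, -1), men = (1, 1), and women = (1, -1), both offsets are (0, 2), so `relation_similarity` returns 1.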

Because the program computes the similarity to every other word, a GPU sorting and filtering algorithm has also been implemented to retrieve the N words with the highest similarity.
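The GPU sort and filter are not described in detail, so the following is only a CPU reference for what that step returns. It is not the project's algorithm. It uses `std::partial_sort` instead of a full sort. Skipping the query word's own index is an assumption, and `top_n` is a hypothetical name.

```cpp
#include <algorithm>
#include <cstddef>
#include <utility>
#include <vector>

// CPU reference for top-N retrieval: given one similarity score per word,
// return (word index, score) for the n highest scores, best first.
// The query word itself (index `exclude`) is skipped so it does not
// trivially appear as its own nearest neighbour.
std::vector<std::pair<std::size_t, float>> top_n(const std::vector<float>& scores,
                                                 std::size_t n, std::size_t exclude) {
    std::vector<std::pair<std::size_t, float>> candidates;
    candidates.reserve(scores.size());
    for (std::size_t i = 0; i < scores.size(); ++i)
        if (i != exclude) candidates.emplace_back(i, scores[i]);

    n = std::min(n, candidates.size());
    // Only the first n elements end up sorted; the rest stay unordered,
    // costing roughly O(W log n) comparisons instead of O(W log W) for W words.
    std::partial_sort(candidates.begin(), candidates.begin() + n, candidates.end(),
                      [](const auto& x, const auto& y) { return x.second > y.second; });
    candidates.resize(n);
    return candidates;
}
```

Because N is tiny compared with a vocabulary of millions of words, avoiding a full sort of all scores is the main saving. That is the same motivation behind filtering before sorting on the GPU.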

Results

The final implementation, which uses warp synchronization and minimizes memory usage during sorting, computes all similarities in under 10 ms.